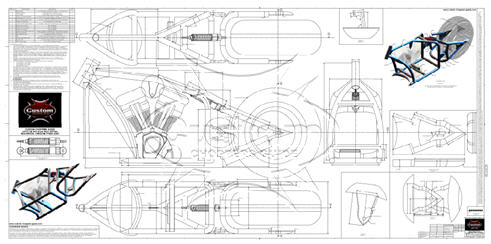

Chopper Frame Blueprints PDF Files

Unlike most shops, our primary business is building motorcycle, chopper and bagger frames. We offer frames built to any specifications as well as our own line of production frames. We manufacture Hardtail, Softail, Swingarm or bagger frames in many of the configurations you may be looking for. From pro-street to bobber to full-blown choppers, we have a setup to fit your next build. All of our chopper frames and frame work are built from the finest seamless DOM tubing available and are fully TIG welded. Each frame or rolling chassis kit comes with an MSO, which you’ll need for titling.

All of our frames are available with many options. If you’re looking for a drop seat, curved tubing, custom down tubes, or a specific look, we’ve got you covered. And again, each of our frame models is available in Rigid, Softail or Swingarm versions.

Available Custom Frame Options

The easiest frame to build is a traditional old-school chopper, since there aren’t any complicated bends or compound miters to cut, so we decided to show the chassis fabrication process from A to Z as we do it.

Popular Frame Models – All Frames Are Built Custom To Your Specs

Let’s say we’re interested in text mining the opinions of The Supreme Court of the United States from the 2014 term. The opinions are published as PDF files at the following web page http://www.supremecourt.gov/opinions/slipopinion/14. We would probably want to look at all 76 opinions, but for the purposes of this introductory tutorial we’ll just look at the last three of the term: (1) Glossip v. Gross, (2) State Legislature v. Arizona Independent Redistricting Comm’n, and (3) Michigan v. EPA. These are the first three listed on the page. To follow along with this tutorial, download the three opinions by clicking on the name of the case. (If you want to download all the opinions, you may want to look into using a browser extension such as DownThemAll.)

To begin, we load the pdftools package, which provides functions for extracting text from PDF files.

Next, create a vector of PDF file names using the list.files function. The pattern argument says to only grab those files ending with “pdf”:
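The listing step might look something like the following minimal sketch. To keep it self-contained, it creates a temporary folder holding three hypothetical files (opinion1.pdf, opinion2.pdf and readme.txt) in place of the downloaded opinions. The regular expression "pdf$" is one way to express “ends with pdf”.

```r
# Hypothetical download folder: a fresh temporary directory with two PDFs and one other file
dl_dir <- tempfile("opinions")
dir.create(dl_dir)
invisible(file.create(file.path(dl_dir, c("opinion1.pdf", "opinion2.pdf", "readme.txt"))))

# Keep only names ending in "pdf"; the $ anchors the match to the end of the name
files <- list.files(path = dl_dir, pattern = "pdf$")
files
```

Giving list.files() a path argument is an alternative to changing the working directory. The returned names are relative to that folder unless full.names = TRUE is also supplied.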

NOTE: the code above only works if your working directory is set to the folder where you downloaded the PDF files. A quick way to do this in RStudio is to go to Session > Set Working Directory.

The “files” vector contains all the PDF file names. We’ll use this vector to automate the process of reading in the text of the PDF files.


The pdftools function for extracting text is pdf_text. Using the lapply function, we can apply the pdf_text function to each element in the “files” vector and create an object called “opinions”.

This creates a list object with three elements, one for each document. The length function verifies it contains three elements:

Each element is a vector that contains the text of the PDF file. The length of each vector corresponds to the number of pages in the PDF file. For example, the first vector has length 81 because the first PDF file has 81 pages. We can apply the length function to each element to see this:
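Below is a minimal sketch of the reading and counting steps. The two PDFs are hypothetical stand-ins written with base R's pdf() graphics device, where each plot.new() call starts a new page. Because of that, the page counts are known in advance. With the real opinions you would pass the file names from list.files() instead.

```r
library(pdftools)

# Hypothetical stand-in PDFs drawn with base R's pdf() device (one page per plot.new())
make_pdf <- function(path, pages) {
  pdf(path)
  for (p in pages) {
    plot.new()
    text(0.5, 0.5, p)
  }
  invisible(dev.off())
}
files <- file.path(tempdir(), c("doc1.pdf", "doc2.pdf"))
make_pdf(files[1], c("Page one", "Page two", "Page three"))
make_pdf(files[2], "Only page")

# Apply pdf_text() to every file; each result holds one character string per page
opinions <- lapply(files, pdf_text)

length(opinions)          # one list element per PDF
sapply(opinions, length)  # number of pages in each PDF
```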

And we’re pretty much done! The PDF files are now in R, ready to be cleaned up and analyzed. If you want to see what has been read in, you could enter the following in the console, but it’s going to produce unpleasant blocks of text littered with character escapes such as \r and \n.
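The next sketch shows the difference between the escaped form of the text and the rendered form. It uses a made-up string shaped like one page of output; real pages are much longer. print() shows the escapes literally, while cat() renders them as line breaks. Splitting on the pattern "\r?\n" is one way to break a page into lines whether or not carriage returns are present.

```r
# A made-up page of text containing \r\n line endings
page <- "OPINION OF THE COURT\r\nThe judgment is affirmed.\r\n"

print(page)  # escapes such as \r and \n are shown literally
cat(page)    # escapes are interpreted as line breaks

# Split the page into lines; "\r?\n" matches both \r\n and a bare \n
lines <- strsplit(page, "\r?\n")[[1]]
lines
```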

When text has been read into R, we typically proceed to some sort of analysis. Here’s a quick demo of what we could do with the tm package (tm = text mining).

First we load the tm package and then create a corpus, which is basically a database for text. Notice that instead of working with the opinions object we created earlier, we start over.

The Corpus function creates a corpus. The first argument to Corpus is what we want to use to create the corpus. In this case, it’s the vector of PDF files. To do this, we use the URISource function to indicate that the files vector is a URI source. URI stands for Uniform Resource Identifier. In other words, we’re telling the Corpus function that the vector of file names identifies our resources. The second argument, readerControl, tells Corpus which reader to use to read in the text from the PDF files. That would be readPDF, a tm function. The readerControl argument requires a list of control parameters, one of which is reader, so we enter list(reader = readPDF). Finally we save the result to an object called “corp”.

It turns out that the readPDF function in the tm package actually creates a function that reads in PDF files. The documentation tells us it uses the pdftools::pdf_text function as the default, which is the same function we used above. (?readPDF)
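Putting these pieces together, the corpus step might look like the following sketch. It uses two hypothetical one-page PDFs drawn with R's pdf() device instead of the downloaded opinions. It also assumes the pdftools package is installed, since readPDF() uses it by default.

```r
library(tm)  # readPDF() relies on the pdftools package (its default engine)

# Hypothetical stand-in PDFs drawn with base R's pdf() device
make_pdf <- function(path, txt) {
  pdf(path)
  plot.new()
  text(0.5, 0.5, txt)
  invisible(dev.off())
}
files <- file.path(tempdir(), c("a.pdf", "b.pdf"))
make_pdf(files[1], "The court affirmed the judgment.")
make_pdf(files[2], "The court reversed the judgment.")

# URISource() marks the file names as resources; readPDF supplies the reader
corp <- Corpus(URISource(files),
               readerControl = list(reader = readPDF))
corp
```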

Now that we have a corpus, we can create a term-document matrix, or TDM for short. A TDM stores counts of terms for each document. The tm package provides a function to create a TDM called TermDocumentMatrix.

The first argument is our corpus. The second argument is a list of control parameters. In our example we tell the function to clean up the corpus before creating the TDM: remove punctuation, remove stopwords (e.g., the, of, in), convert text to lower case, stem the words, remove numbers, and only count words that appear at least 3 times. We save the result to an object called “opinions.tdm”.

To inspect the TDM and see what it looks like, we can use the inspect function. Below we look at the first 10 terms:
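The TDM and inspection steps could be sketched as follows. The sketch uses a small in-memory corpus, VCorpus(VectorSource(...)), as a hypothetical stand-in for the PDF corpus. It assumes the “at least 3” filter is expressed with tm's bounds option. Note that bounds$global counts how many documents a term occurs in, not its total number of occurrences. The stemming = TRUE setting also assumes the SnowballC package is installed.

```r
library(tm)  # stemming = TRUE also requires the SnowballC package

# Hypothetical stand-in for the PDF corpus: three short in-memory documents
docs <- c("The court affirmed the judgment in 2015. The court agreed.",
          "The court reversed the judgment; the dissent disagreed.",
          "The court remanded the judgment for further proceedings.")
corp <- VCorpus(VectorSource(docs))

opinions.tdm <- TermDocumentMatrix(corp,
  control = list(removePunctuation = TRUE,
                 stopwords = TRUE,
                 tolower = TRUE,
                 stemming = TRUE,
                 removeNumbers = TRUE,
                 # keep only terms that occur in at least 3 documents
                 bounds = list(global = c(3, Inf))))

# Look at (up to) the first 10 terms
inspect(opinions.tdm[1:min(10, nTerms(opinions.tdm)), ])
```

With these three made-up documents, only the terms that occur in all three (court and judgment) pass the filter.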

We see words preceded by double quotes and dashes even though we specified removePunctuation = TRUE. We even see a series of dashes being treated as a word. What happened? It appears the pdf_text function preserved the Unicode curly quotes and em dashes used in the PDF files.

One way to take care of this is to apply the removePunctuation function manually with tm_map; both functions are in the tm package. The removePunctuation function has an argument called ucp that, when set to TRUE, looks for Unicode punctuation. Here’s how we can use it to remove punctuation from the corpus:

Now we can re-create the TDM, this time without the removePunctuation = TRUE argument.
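Here is a sketch of the Unicode-aware cleanup followed by a rebuilt TDM. The one-line document is hypothetical and writes the curly quotes and the dash as \u escapes. The bounds filter is left out because a single document could never meet a three-document threshold. SnowballC is again assumed to be installed for stemming.

```r
library(tm)  # stemming = TRUE also requires the SnowballC package

# Hypothetical one-document corpus with curly quotes (U+201C, U+201D) and a dash (U+2014)
txt <- "The \u201cjudgment\u201d stands \u2014 affirmed."
corp <- VCorpus(VectorSource(txt))

# ucp = TRUE treats every character in the Unicode punctuation category as punctuation
corp <- tm_map(corp, removePunctuation, ucp = TRUE)
content(corp[[1]])

# Re-create the TDM; removePunctuation is omitted because the corpus is already clean
tdm <- TermDocumentMatrix(corp,
  control = list(stopwords = TRUE,
                 tolower = TRUE,
                 stemming = TRUE,
                 removeNumbers = TRUE))
Terms(tdm)
```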

And this appears to have taken care of the punctuation problem.

We see, for example, that the term “abandon” appears in the third PDF file 8 times. Also notice that words have been stemmed. The word “achiev” is the stemmed version of “achieve”, “achieved”, “achieves”, and so on.

The tm package includes a few functions for summary statistics. We can use the findFreqTerms function to quickly find frequently occurring terms. To find words that occur at least 100 times:
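The following sketch runs findFreqTerms() on a tiny hypothetical corpus whose counts are easy to check by eye: court 5, judgment 3, appeal 2, dissent 1. The lowfreq threshold is compared with each term's total count summed over all documents. It is set to 3 rather than 100 only because the example is so small.

```r
library(tm)

# Hypothetical corpus with known totals: court 5, judgment 3, appeal 2, dissent 1
docs <- c("court court court judgment appeal",
          "court judgment judgment appeal",
          "court dissent")
tdm <- TermDocumentMatrix(VCorpus(VectorSource(docs)))

# Terms whose total count across all documents is at least 3
findFreqTerms(tdm, lowfreq = 3)
```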


To see the counts of those words, we could save the result and use it to subset the TDM. Notice we have to use as.matrix to see a printout of the subsetted TDM.

To see the total counts for those words, we could save the matrix and apply the sum function across the rows:
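The subsetting and summing steps might be sketched like this. The sketch uses a tiny hypothetical corpus with hand-checkable counts rather than the opinions. The call apply(freq.mat, 1, sum) follows the description above, and rowSums(freq.mat) gives the same totals.

```r
library(tm)

# Hypothetical corpus with known totals: court 5, judgment 3, appeal 2, dissent 1
docs <- c("court court court judgment appeal",
          "court judgment judgment appeal",
          "court dissent")
tdm <- TermDocumentMatrix(VCorpus(VectorSource(docs)))

freq.terms <- findFreqTerms(tdm, lowfreq = 3)

# Subset the TDM by term; as.matrix() turns the sparse result into a printable matrix
freq.mat <- as.matrix(tdm[freq.terms, ])
freq.mat

# Total count per term: sum across each row
totals <- apply(freq.mat, 1, sum)
totals
```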

Many more analyses are possible. But again the main point of this tutorial was how to read in text from PDF files for text mining. Hopefully this provides a template to get you started.

For questions or clarifications regarding this article, contact the UVa Library StatLab: statlab@virginia.edu

Clay Ford, Statistical Research Consultant

University of Virginia Library

April 14, 2016