Winutils Exe Hadoop Downloads


This tutorial teaches you how to run a .NET for Apache Spark app using .NET Core on Windows.

In this tutorial, you learn how to:

- Prepare your Windows environment for .NET for Apache Spark

- Download the Microsoft.Spark.Worker

- Build and run a simple .NET for Apache Spark application

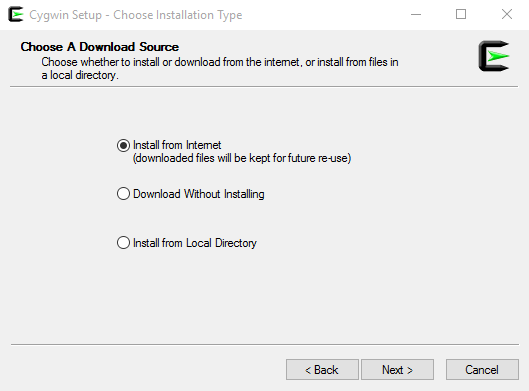

Prepare your environment

Before you begin, make sure you can run dotnet, java, mvn, spark-shell from your command line. If your environment is already prepared, you can skip to the next section. If you cannot run any or all of the commands, follow the steps below.


Download and install the .NET Core 2.1.x SDK. Installing the SDK adds the dotnet toolchain to your PATH. Use the PowerShell command dotnet --version to verify the installation.

Install Visual Studio 2017 or Visual Studio 2019 with the latest updates. You can use Community, Professional, or Enterprise. The Community version is free.

Choose the following workloads during installation:

- .NET desktop development
  - All required components
  - .NET Framework 4.6.1 Development Tools
- .NET Core cross-platform development
  - All required components

Install Java 1.8.

- Select the appropriate version for your operating system. For example, select jdk-8u201-windows-x64.exe for a Windows x64 machine.

- Use the PowerShell command java -version to verify the installation.
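To script this check, you can parse the banner that java -version prints. The sketch below assumes the usual format (for example, version "1.8.0_201"). Releases before Java 9 use the "1.x" numbering; Java 9 and later put the major version first. The banner is written to stderr. The function names are hypothetical helpers and are not part of any tool.

```python
import re
import subprocess

def java_major_version(version_output: str) -> int:
    """Return the major Java version from `java -version` output."""
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        raise ValueError("no Java version string found")
    first, second = match.group(1), match.group(2)
    # Before Java 9 the scheme was "1.<major>", e.g. "1.8.0_201" means Java 8.
    if first == "1" and second is not None:
        return int(second)
    return int(first)

def installed_java_major() -> int:
    # `java -version` prints its banner on stderr rather than stdout.
    result = subprocess.run(["java", "-version"], capture_output=True, text=True, check=True)
    return java_major_version(result.stderr)
```

On a machine set up as described above, installed_java_major() should return 8.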

Install Apache Maven 3.6.0+.

- Download Apache Maven 3.6.0.

- Extract to a local directory. For example, c:\bin\apache-maven-3.6.0.

- Add Apache Maven to your PATH environment variable. If you extracted to c:\bin\apache-maven-3.6.0, you would add c:\bin\apache-maven-3.6.0\bin to your PATH.

- Use the PowerShell command mvn -version to verify the installation.
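The "3.6.0+" requirement is a version comparison. Below is a minimal sketch that reads the "Apache Maven X.Y.Z" line printed by mvn -version and compares it against a minimum. It assumes the banner keeps that prefix. The helper names are hypothetical.

```python
from __future__ import annotations
import re

def maven_version(version_output: str) -> tuple[int, int, int]:
    """Extract (major, minor, patch) from the `mvn -version` banner."""
    match = re.search(r"Apache Maven (\d+)\.(\d+)\.(\d+)", version_output)
    if match is None:
        raise ValueError("not a Maven version banner")
    return tuple(int(part) for part in match.groups())

def meets_minimum(found: tuple[int, int, int], minimum: tuple[int, int, int] = (3, 6, 0)) -> bool:
    # Tuples compare element by element, so (3, 6, 3) >= (3, 6, 0) but (3, 5, 4) < (3, 6, 0).
    return found >= minimum
```

Comparing integer tuples avoids a common string-comparison trap. As strings, "3.10.0" sorts before "3.6.0".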

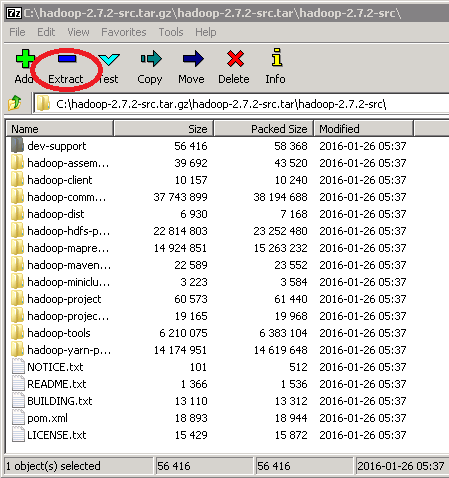

Install Apache Spark 2.3.x. Apache Spark 2.4 and later aren't supported.

- Download Apache Spark 2.3.x and extract it into a local folder using a tool like 7-Zip or WinZip. For example, you might extract it to c:\bin\spark-2.3.2-bin-hadoop2.7.

- Add Apache Spark to your PATH environment variable. If you extracted to c:\bin\spark-2.3.2-bin-hadoop2.7, you would add c:\bin\spark-2.3.2-bin-hadoop2.7\bin to your PATH.

- Add a new environment variable called SPARK_HOME. If you extracted to C:\bin\spark-2.3.2-bin-hadoop2.7, use C:\bin\spark-2.3.2-bin-hadoop2.7 for the Variable value.

- Verify you are able to run spark-shell from your command line.
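The Spark folder name encodes both the Spark version and the Hadoop version it was built against. The Hadoop version is also what you need when choosing winutils.exe. This sketch assumes SPARK_HOME ends in a standard binary-distribution name such as spark-2.3.2-bin-hadoop2.7. The helpers are hypothetical.

```python
from __future__ import annotations
import re
from pathlib import PureWindowsPath

# Matches binary-distribution folder names such as "spark-2.3.2-bin-hadoop2.7".
SPARK_DIR = re.compile(r"spark-(\d+)\.(\d+)\.(\d+)-bin-hadoop(\d+\.\d+)$")

def parse_spark_home(spark_home: str) -> tuple[tuple[int, int, int], str]:
    """Return ((major, minor, patch), hadoop_version) from a SPARK_HOME path."""
    name = PureWindowsPath(spark_home).name
    match = SPARK_DIR.match(name)
    if match is None:
        raise ValueError(f"unexpected Spark folder name: {name}")
    major, minor, patch, hadoop = match.groups()
    return (int(major), int(minor), int(patch)), hadoop

def is_supported_spark(version: tuple[int, int, int]) -> bool:
    # The tutorial targets Spark 2.3.x; 2.4 and later are not supported.
    return version[:2] == (2, 3)
```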

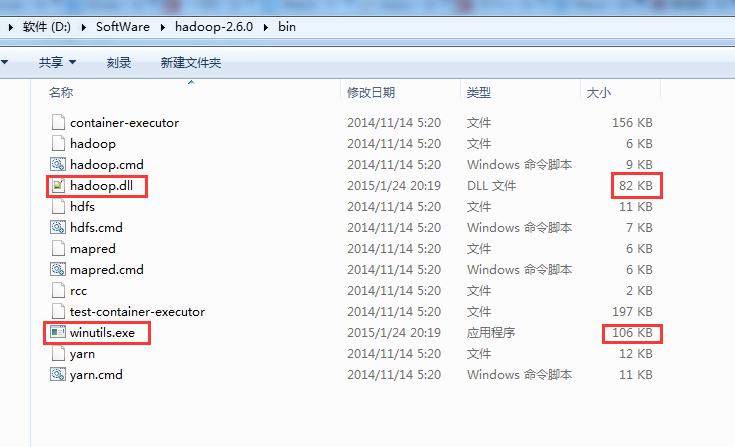

Set up WinUtils.

- Download the winutils.exe binary from the WinUtils repository. Select the version of Hadoop the Spark distribution was compiled with. For example, you use hadoop-2.7.1 for Spark 2.3.2. The Hadoop version is annotated at the end of your Spark install folder name.

- Save the winutils.exe binary to a directory of your choice. For example, c:\hadoop\bin.

- Set HADOOP_HOME to reflect the directory with winutils.exe, without bin. For example, c:\hadoop.

- Set the PATH environment variable to include %HADOOP_HOME%\bin.
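The relationship between winutils.exe, HADOOP_HOME and the PATH entry is plain path arithmetic. HADOOP_HOME is the parent of the bin folder. This sketch assumes winutils.exe sits directly in a folder named bin. The hadoop_settings helper and its PATH_ENTRY key are hypothetical.

```python
from __future__ import annotations
from pathlib import PureWindowsPath

def hadoop_settings(winutils_exe: str) -> dict[str, str]:
    """Derive HADOOP_HOME and the matching PATH entry from winutils.exe's location."""
    exe = PureWindowsPath(winutils_exe)
    if exe.name.lower() != "winutils.exe" or exe.parent.name.lower() != "bin":
        raise ValueError("expected a path ending in ...\\bin\\winutils.exe")
    # HADOOP_HOME is the folder *above* bin; PATH gets %HADOOP_HOME%\bin.
    hadoop_home = exe.parent.parent
    return {"HADOOP_HOME": str(hadoop_home), "PATH_ENTRY": str(hadoop_home / "bin")}
```

PureWindowsPath handles backslash paths on any OS, so the logic can be checked without a Windows machine.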

Double check that you can run dotnet, java, mvn, spark-shell from your command line before you move to the next section.
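This final check can also be automated. The sketch below uses shutil.which, which consults PATHEXT on Windows and should therefore resolve launchers such as mvn.cmd and spark-shell.cmd. The missing_commands helper is hypothetical. It takes a which argument so the lookup can be replaced in tests.

```python
import shutil

REQUIRED_COMMANDS = ("dotnet", "java", "mvn", "spark-shell")

def missing_commands(commands=REQUIRED_COMMANDS, which=shutil.which):
    """Return the commands that cannot be resolved on PATH."""
    return [cmd for cmd in commands if which(cmd) is None]
```

An empty list means every tool is on PATH. Anything returned points back to the corresponding setup step above.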

Download the Microsoft.Spark.Worker release

Download the Microsoft.Spark.Worker release from the .NET for Apache Spark GitHub Releases page to your local machine. For example, you might download it to the path c:\bin\Microsoft.Spark.Worker.

Create a new environment variable called DotnetWorkerPath and set it to the directory where you downloaded and extracted the Microsoft.Spark.Worker. For example, c:\bin\Microsoft.Spark.Worker.
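At this point three environment variables should name existing directories: SPARK_HOME, HADOOP_HOME and DotnetWorkerPath. This sketch reports any that are unset or point nowhere. The check_environment helper is hypothetical. It takes the environment mapping and the directory test as parameters so it can be exercised without touching the real machine.

```python
from __future__ import annotations
import os
from collections.abc import Mapping

REQUIRED_VARS = ("SPARK_HOME", "HADOOP_HOME", "DotnetWorkerPath")

def check_environment(env: Mapping[str, str] = os.environ, is_dir=os.path.isdir) -> list[str]:
    """Return human-readable problems with the tutorial's environment variables."""
    problems = []
    for name in REQUIRED_VARS:
        value = env.get(name)
        if not value:
            problems.append(f"{name} is not set")
        elif not is_dir(value):
            problems.append(f"{name} points to a missing directory: {value}")
    return problems
```

On Windows, os.environ lookups are case-insensitive, so the mixed-case DotnetWorkerPath name is found regardless of how it was typed in the System Properties dialog.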

Clone the .NET for Apache Spark GitHub repo

Use the following GitBash command to clone the .NET for Apache Spark repo to your machine.

Write a .NET for Apache Spark app

Open Visual Studio and navigate to File > Create New Project > Console App (.NET Core). Name the application HelloSpark.

Install the Microsoft.Spark NuGet package. For more information on installing NuGet packages, see Different ways to install a NuGet Package.

In Solution Explorer, open Program.cs and write the following C# code:

Build the solution.

Run your .NET for Apache Spark app

Open PowerShell and change the directory to the folder where your app is stored.

Create a file called people.json with the following content:

Use the following PowerShell command to run your app:

Congratulations! You successfully authored and ran a .NET for Apache Spark app.

Next steps

In this tutorial, you learned how to:

- Prepare your Windows environment for .NET for Apache Spark

- Download the Microsoft.Spark.Worker

- Build and run a simple .NET for Apache Spark application

Check out the resources page to learn more.

Windows binaries for Hadoop versions

These are built directly from the same git commit used to create the official ASF releases; they are checked out and built on a Windows VM which is dedicated purely to testing Hadoop/YARN apps on Windows. It is not a day-to-day system, so it is isolated from drive-by/email security attacks.

Status: Go to cdarlint/winutils for current artifacts

I've been too busy with other things to work on this for a long time, so I'm grateful to cdarlint for taking up this work: cdarlint/winutils.

If you want more current binaries, please go there.

Do note that, with some effort, it should be possible to stop the Hadoop file:// classes (Local and RawLocal) from needing the Hadoop native libs, except in the special case where you are doing file permissions work. If someone wants to put some effort into cutting the need for these libs on Windows systems just to run Spark and similar tools locally, file a JIRA on Apache, then a PR against apache/hadoop. Thanks.

Security: can you trust this release?

- I am the Hadoop committer 'stevel': I have nothing to gain by creating malicious versions of these binaries. If I wanted to run anything on your systems, I'd be able to add the code into Hadoop itself.

- I'm signing the releases.

- My keys are published on the ASF committer keylist under my username.

- The latest GPG key (E7E4 26DF 6228 1B63 D679 6A81 950C C3E0 32B7 9CA2) actually lives on a yubikey for physical security; the signing takes place there.

- The same yubikey is used for 2FA to GitHub, for uploading artifacts and making the release.

Someone malicious would need physical access to my office to sign artifacts under my name. If they could do that, they could commit malicious code into Hadoop itself, even signing those commits with the same GPG key. Though they'd need the PIN to unlock the key, which I have to type in whenever the laptop wakes up and I want to sign something. Getting it would take putting something malicious onto my machine, or sniffing the Bluetooth packets from the keyboard to the laptop. Were someone to get physical access to my machine, they could probably install a malicious version of git, one which modified code before the check-in. I don't actually my patches to verify that there's been no tampering, but we do tend to keep an eye on what our peers put in.

The other tactic would have been for a malicious yubikey to end up being delivered by Amazon to my house. I don't have any defences against anyone going to that level of effort.

2017-12 update: That key has been revoked, though it was never actually compromised. The cause was a lack of randomness in the yubikey's prime number generator, hence an emergency cancel session. I haven't yet set things up properly again.

Note: Artifacts prior to Hadoop 2.8.0-RC3 [were signed with a different key](https://pgp.mit.edu/pks/lookup?op=vindex&search=0xA92454F9174786B4); again, on the ASF key list.
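When checking a signature, compare the fingerprint that gpg --fingerprint reports for the signing key against the one published above. Keep in mind that the 2017-12 update says that key has since been revoked. Fingerprints are often shown with spaces and mixed case. The hypothetical helpers below normalise them before comparing. They also check a 16-hex-digit long key ID (such as the 0xA92454F9174786B4 form in the link above), which is the last 64 bits of a v4 fingerprint. They only compare strings and do not verify a signature themselves.

```python
def normalize_fingerprint(fpr: str) -> str:
    # Drop spaces and an optional "0x" prefix so formatting differences don't matter.
    cleaned = fpr.replace(" ", "").upper()
    return cleaned[2:] if cleaned.startswith("0X") else cleaned

PUBLISHED = "E7E4 26DF 6228 1B63 D679 6A81 950C C3E0 32B7 9CA2"

def matches_published(reported: str, published: str = PUBLISHED) -> bool:
    return normalize_fingerprint(reported) == normalize_fingerprint(published)

def key_id_matches(fingerprint: str, long_key_id: str) -> bool:
    # A v4 OpenPGP long key ID is the final 16 hex digits of the fingerprint.
    key_id = normalize_fingerprint(long_key_id)
    if len(key_id) != 16:
        raise ValueError("expected a 16-hex-digit long key ID")
    return normalize_fingerprint(fingerprint).endswith(key_id)
```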

Build Process

A dedicated Windows Server 2012 VM is used for building and testing Hadoop stack artifacts. It is not used for anything else.

This uses a VS build setup from 2010; compiler and linker version: 16.00.30319.01 for x64

Maven 3.3.9 was used; its signature was checked to be that of Jason@maven.org. While my key list doesn't directly trust that signature, I do trust those of other signatories:

Java 1.8:

release process

Windows VM

In hadoop-trunk

The version to build is checked out from the declared SHA1 checksum of the release/RC, hopefully moving to signed tags once signing becomes more common there.
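Before building, you can confirm that the working tree really is at the declared commit. git rev-parse HEAD prints the full SHA1 of the checked-out commit. The sketch compares it, case-insensitively, with the checksum declared for the release/RC. The helper names are hypothetical.

```python
import re
import subprocess

SHA1_HEX = re.compile(r"[0-9a-f]{40}")

def is_expected_commit(head: str, declared: str) -> bool:
    """True if the checked-out HEAD equals the declared release/RC SHA1."""
    head, declared = head.strip().lower(), declared.strip().lower()
    if not SHA1_HEX.fullmatch(declared):
        raise ValueError("declared checksum is not a 40-character SHA1")
    return head == declared

def current_head(repo_dir: str) -> str:
    # `git rev-parse HEAD` prints the full SHA1 of the checked-out commit.
    result = subprocess.run(["git", "rev-parse", "HEAD"], cwd=repo_dir,
                            capture_output=True, text=True, check=True)
    return result.stdout.strip()
```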

The build was executed, relying on the fact that the native-win profile is automatic on Windows:

This creates a distribution, with the native binaries under hadoop-dist\target\hadoop-X.Y.Z\bin.

Create a zip file containing the contents of the winutils%VERSION%. This is done on the Windows machine to avoid any risk of the Windows line-ending files getting modified by git. This isn't committed to git, just copied over to the host VM via the mounted file share.
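The zipping step can be done with Python's standard zipfile module, which stores file bytes unchanged. Files with Windows (CRLF) line endings therefore come out of the archive exactly as they went in. This is a sketch under the assumption that everything under the chosen folder belongs in the archive. The zip_directory helper and the folder layout are hypothetical. Write the zip outside the source folder so it doesn't archive itself.

```python
from __future__ import annotations
import zipfile
from pathlib import Path

def zip_directory(source_dir: str, zip_path: str) -> list[str]:
    """Zip every file under source_dir (paths relative to it); return the archive names."""
    source = Path(source_dir)
    names = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for file in sorted(p for p in source.rglob("*") if p.is_file()):
            arcname = file.relative_to(source).as_posix()
            # write() copies the raw bytes, so CRLF line endings are preserved.
            archive.write(file, arcname)
            names.append(arcname)
    return names
```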

Host machine: Sign everything

Pull down the newly added files from GitHub, then sign the binary ones and push the .asc signatures back.

There isn't a way to sign multiple files in gpg2 on the command line, so it's either write a loop in bash or just edit the line and let path completion simplify your life. Here's the list of sign commands:

Verify the existence of the files, then:

Then go to the directory with the zip file and sign that file too
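Because each file needs its own signing command, a loop does the job. Here it is sketched in Python rather than bash. gpg --armor --detach-sign FILE writes an ASCII-armoured signature to FILE.asc. Which extensions count as "binary" is an assumption made for this sketch. The gpg executable name is a parameter because some systems install it as gpg2. The helper names are hypothetical.

```python
from __future__ import annotations
import subprocess
from pathlib import Path

# Assumption: these suffixes mark the binary artifacts that need signatures.
BINARY_SUFFIXES = {".exe", ".dll", ".lib", ".pdb", ".zip"}

def sign_commands(directory: str, gpg: str = "gpg") -> list[list[str]]:
    """Build one detached-signature command per binary file in directory."""
    files = sorted(p for p in Path(directory).iterdir()
                   if p.is_file() and p.suffix.lower() in BINARY_SUFFIXES)
    # --armor --detach-sign writes <file>.asc next to each input file.
    return [[gpg, "--armor", "--detach-sign", str(p)] for p in files]

def sign_all(directory: str, gpg: str = "gpg") -> None:
    for cmd in sign_commands(directory, gpg):
        subprocess.run(cmd, check=True)  # gpg prompts for the key PIN as needed
```

Building the command list separately from running it lets you review exactly which files will be signed before touching the key.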


GitHub: create the release


- Go to the GitHub repository

- Verify the most recent commit is visible

- Tag the release with the Hadoop version, and include the checksum of the commit the build was made from

- Drop in the .zip and .zip.asc files as binary artifacts
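If you prefer to create the tag locally before drafting the GitHub release, an annotated git tag can carry the build commit's checksum in its message. The tag naming scheme (hadoop-X.Y.Z) and the message wording are hypothetical choices for this sketch. Only git tag -a NAME -m MESSAGE is standard git.

```python
def tag_command(hadoop_version: str, commit_sha: str) -> list[str]:
    """Build a `git tag` command for an annotated tag recording the source commit."""
    # Hypothetical naming: "hadoop-<version>"; adjust to the repository's convention.
    message = f"Windows binaries for Hadoop {hadoop_version}, built from commit {commit_sha}"
    return ["git", "tag", "-a", f"hadoop-{hadoop_version}", "-m", message]
```

Run the resulting command in the repository, then push the tag (for example, git push origin hadoop-X.Y.Z) and attach the .zip and .zip.asc files to the release created from it.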